AI/ML communities across NOAA

Artificial Intelligence (AI) and Machine Learning (ML) efforts are underway across NOAA. As NCAI develops, this will be a space for those Communities of Practice to provide a vehicle for discovery and networking.

Featured NOAA AI Research

Each month the NCAI Newsletter features AI-related NOAA research from our community members. The rotator below highlights research from the current and previous newsletters. Subscribe to the NCAI Newsletter.

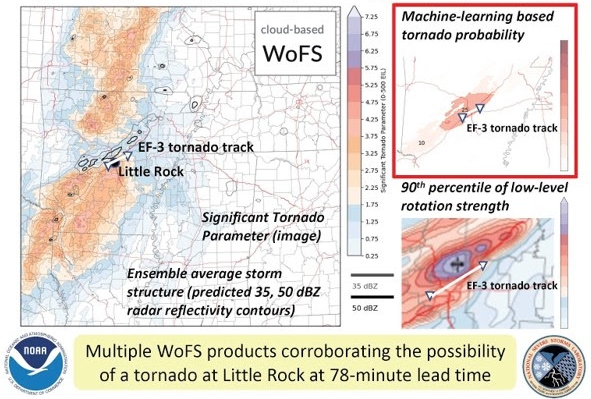

The Warn-on-Forecast System: A cutting-edge storm-scale NWP system made even better by AI

The NOAA National Severe Storms Laboratory (NSSL) and the Cooperative Institute for Severe and High-Impact Weather Research and Operations (CIWRO) are leading the development of the Warn-on-Forecast System (WoFS), a cloud-based, rapidly updating, convection-allowing ensemble designed to support watch-to-warning (0–6 hr) operations for tornadoes, flash floods, and other high-impact weather. The WoFS is scheduled to become operational within the National Weather Service (NWS) Unified Forecast System around 2027, but it is already used routinely by many NWS Weather Forecast Offices, the Storm Prediction Center, and the Weather Prediction Center.

A major strength of the WoFS is its use of machine learning (ML) to generate probabilistic predictions of severe thunderstorm hazards (tornadoes, large hail, damaging wind). Forecasters have frequently found these ML products to be helpful during severe weather operations. Additional ML models are in development for predicting not only severe weather, but also heavy rainfall and regions where WoFS forecasts of storms will be unusually high- or low-quality. Inspired by the recent success of emerging global data-driven AI-NWP models, the NSSL WoFS team has begun exploring the concept of a data-driven WoFS, where forecasts are generated by deep learning models trained on archived WoFS output.

In February, NOAA entities involved with the WoFS received a 2023 DOC Gold Medal Award for “scientific and engineering excellence in developing a revolutionary prediction tool that provides short-term probabilistic thunderstorm guidance.” AI promises to play an increasingly vital role as NSSL and other agencies advance the frontiers of storm-scale prediction.

Can Scientists Train Machines to Listen for Marine Ecosystem Health?

What if we could detect a problem within a marine ecosystem just like a doctor can detect a heart murmur using a stethoscope? Listening to the heart and hearing the murmur tells the doctor there may be a more serious underlying condition that should be addressed before it gets worse. In an ocean world where things like climate change and overfishing have the ability to drastically alter the functionality of entire ecosystems, having a stethoscope to detect signs of major issues could really come in handy to marine resource managers. That’s where sound monitoring, artificial intelligence, and machine learning come in.

Since 2018, NOAA and the U.S. Navy have engaged in a multi-year effort to monitor underwater sound within the National Marine Sanctuary System. The agencies worked with numerous scientific partners to study sound within seven national marine sanctuaries and one marine national monument on the U.S. east and west coasts and in the Pacific Islands region. As the first coordinated monitoring effort of its kind for the National Marine Sanctuary System, SanctSound was designed to provide standardized acoustic data collection to document how much sound is present within these protected areas, as well as the potential impacts of unnatural noises on the areas’ marine taxa and habitats.

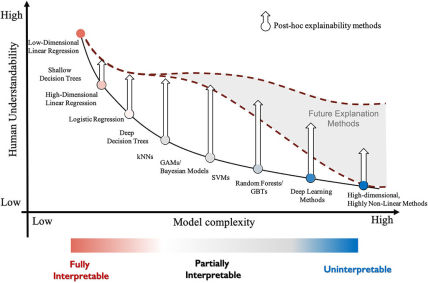

A Machine Learning Explainability Tutorial for Atmospheric Sciences

With increasing interest in explaining machine learning (ML) models, this paper synthesizes many topics related to ML explainability. We distinguish explainability from interpretability, local from global explainability, and feature importance from feature relevance. We demonstrate and visualize different explanation methods, show how to interpret them, and provide a complete Python package (scikit-explain) that lets future researchers and model developers explore these explainability methods.

The explainability methods include Shapley additive explanations (SHAP), Shapley additive global explanation (SAGE), and accumulated local effects (ALE). Our focus is primarily on Shapley-based techniques, which serve as a unifying framework for various existing methods to enhance model explainability. For example, SHAP unifies methods like local interpretable model-agnostic explanations (LIME) and tree interpreter for local explainability, while SAGE unifies the different variations of permutation importance for global explainability.
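To make the idea behind Shapley-based local explanations concrete, here is a minimal sketch that computes exact Shapley values for one prediction by enumerating every feature coalition. It is not the scikit-explain or SHAP API: `toy_model`, the `background` rows, and the helper names are hypothetical, and features outside a coalition are filled in from the background rows, which is one common convention among several. Exact enumeration is only practical for a handful of features; practical tools rely on approximations.

```python
from itertools import combinations
from math import factorial


def toy_model(x):
    """Hypothetical model: one additive term plus one interaction term."""
    return 2.0 * x[0] + 1.0 * x[1] * x[2]


def coalition_value(model, x, background, subset):
    """Mean prediction when features in `subset` take the values in x
    and all other features take their values from each background row."""
    total = 0.0
    for row in background:
        mixed = [x[j] if j in subset else row[j] for j in range(len(x))]
        total += model(mixed)
    return total / len(background)


def shapley_values(model, x, background):
    """Exact Shapley values: weighted average of each feature's marginal
    contribution over all coalitions of the remaining features."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                gain = (coalition_value(model, x, background, s | {i})
                        - coalition_value(model, x, background, s))
                phi[i] += weight * gain
    return phi


# Assumed illustrative data, not taken from the paper.
background = [
    [0.0, 0.0, 0.0],
    [1.0, 1.0, 0.0],
    [0.0, 1.0, 1.0],
    [1.0, 0.0, 1.0],
]
x = [1.0, 2.0, 3.0]

phi = shapley_values(toy_model, x, background)
baseline = coalition_value(toy_model, x, background, set())

print("per-feature contributions:", phi)
print("baseline + contributions:", baseline + sum(phi))
print("model prediction:", toy_model(x))
```

The contributions add up to the difference between the prediction for `x` and the average prediction over the background rows, which is the additive property that gives SHAP its name.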

We provide a short tutorial for explaining ML models using three disparate datasets: a convection-allowing model dataset for severe weather prediction, a nowcasting dataset for subfreezing road surface prediction, and satellite-based data for lightning prediction. In addition, we showcase the adverse effects that correlated features can have on the explainability of a model. Finally, we demonstrate how to evaluate the model impact of feature groups instead of individual features. Evaluating feature groups mitigates the impacts of feature correlations and can provide a more holistic understanding of the model.
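The effect of correlated features on importance scores, and the benefit of scoring features as a group, can be illustrated with a small permutation-importance sketch. Everything here is an assumption made for illustration: the fixed `model`, the synthetic data, and the function names are hypothetical and are not drawn from the paper or from scikit-explain. Two inputs carry nearly the same signal, so shuffling either one alone understates how much the model depends on that signal, while shuffling both together reveals it.

```python
import random


def model(row):
    """Hypothetical fixed model that splits its weight across two
    nearly identical inputs (columns 0 and 1)."""
    return 0.5 * row[0] + 0.5 * row[1] + row[2]


def mse(rows, targets):
    """Mean squared error of the model on the given rows."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(rows, targets, group, rng, n_repeats=10):
    """Mean increase in MSE when the columns in `group` are shuffled
    together, using the same row permutation for every column in the group."""
    base = mse(rows, targets)
    increases = []
    for _ in range(n_repeats):
        order = list(range(len(rows)))
        rng.shuffle(order)
        shuffled = []
        for i, src in enumerate(order):
            new_row = list(rows[i])
            for col in group:
                new_row[col] = rows[src][col]
            shuffled.append(new_row)
        increases.append(mse(shuffled, targets) - base)
    return sum(increases) / n_repeats


# Synthetic data: columns 0 and 1 are noisy copies of the same signal.
rng = random.Random(0)
rows, targets = [], []
for _ in range(500):
    signal = rng.gauss(0.0, 1.0)
    other = rng.gauss(0.0, 1.0)
    rows.append([signal + rng.gauss(0.0, 0.1),
                 signal + rng.gauss(0.0, 0.1),
                 other])
    targets.append(signal + other)

imp_x0 = permutation_importance(rows, targets, [0], rng)
imp_x1 = permutation_importance(rows, targets, [1], rng)
imp_group = permutation_importance(rows, targets, [0, 1], rng)

print("importance of column 0 alone:", imp_x0)
print("importance of column 1 alone:", imp_x1)
print("importance of columns 0 and 1 as a group:", imp_group)
```

In this setup the grouped score is larger than either single-feature score, because shuffling one column leaves its correlated partner in place to carry the signal.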

A Biologist’s Guide to the Galaxy:

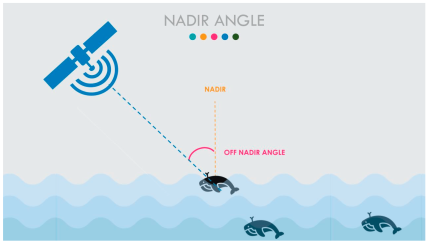

Leveraging Artificial Intelligence and Very High-Resolution Satellite Imagery to Monitor Marine Mammals from Space

Effective marine mammal conservation management depends on accurate and timely data on abundance and distribution. Scientists currently employ a variety of visual (vessel, aircraft) and acoustic (buoys, gliders, fixed moorings) research platforms to monitor marine mammals. While various approaches have different challenges and benefits, it is clear that very high-resolution (VHR) satellite imagery holds tremendous potential to acquire data in difficult-to-reach locations.

The time has come to create an operational platform leveraging the increased resolution of satellite imagery, proof-of-concept research, advances in cloud computing, and advanced machine learning methods to monitor the world’s oceans. The Geospatial Artificial Intelligence for Animals (GAIA) initiative was formed to address this challenge. In this paper, we share lessons learned, challenges faced, and our vision for how VHR satellite imagery can be used to enhance our understanding of cetacean distribution in the future. Having another tool in the toolbox enables greater flexibility in achieving our research and conservation goals.

The Geospatial Artificial Intelligence for Animals initiative brings together an extraordinary coalition of organizations to tackle the challenge of designing a large-scale operational platform to detect marine mammals from space-orbiting satellites. These organizations include government agencies (the National Oceanic and Atmospheric Administration (NOAA), the U.S. Naval Research Laboratory (NRL), the Bureau of Ocean Energy Management (BOEM), and the U.S. Geological Survey (USGS)), independent research organizations (British Antarctic Survey), academia (University of Edinburgh, University of Minnesota), and the private sector (Microsoft AI for Good Research Lab, Maxar Technologies).